In the past few years, the technology sector has been plagued by recruitment problems, including post-interview ghosting and bait-and-switch tactics that hurt both sides of the hiring process. Now, according to the FBI, deepfake interviewees pose a new threat.

According to the FBI

According to the agency’s most recent PSA, many people are using deepfakes to assume the identities of others in order to get remote jobs. First, the offender gathers enough confidential information about the victim to credibly apply for a job as that individual. Next, they obtain a few high-resolution images of the victim, either through theft or an internet search. For video interviews, the impostor then creates and deploys a convincing deepfake using those images and, occasionally, voice impersonation.

Jobs with exposure to “highly sensitive knowledge,” “financial data, business IT systems and/or privileged data” are common targets for impersonation attempts, according to the FBI. A company’s finances, the stock market, competing products and services, or vast quantities of confidential information could all be jeopardized if such information were leaked.

Impostors may not wish to stay in their fraudulently obtained positions long-term. Alternatively, they may want to earn US dollars from outside the country or enjoy the privileges of a career they otherwise could not attain. The impersonations may also be part of a broader plot that could jeopardize national security.

We Don’t Know If They Get Caught

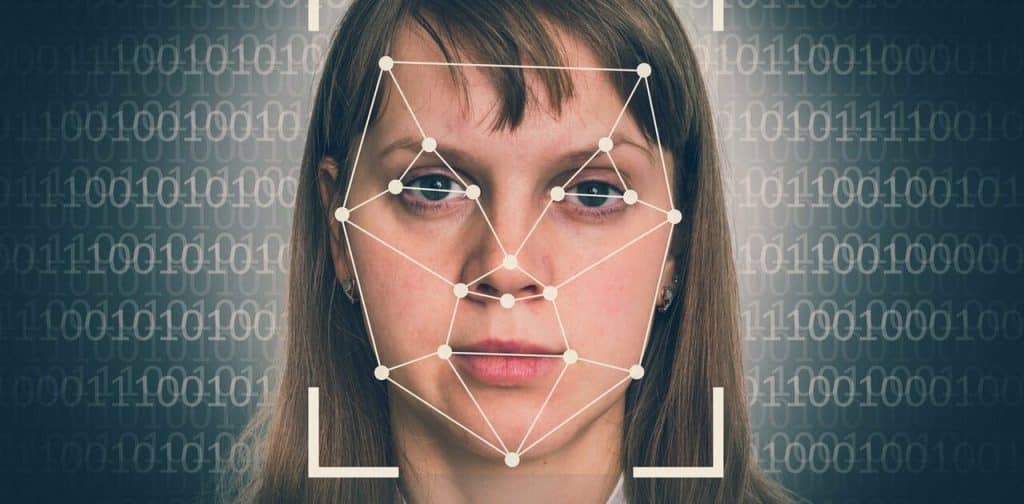

It is currently unknown whether job candidates who impersonate others during an application are ever caught. Deepfakes tend to be one-directional, unlike the two-way conversation of a job interview. Ideally, a deepfake interviewee would be obvious even to the naked eye.

A rushed employer, however, may not notice an unnatural delay in the video feed, or may mistake it for a connection problem. The “ideal” criminal opportunity may therefore arise from a combination of technical skill and a little luck.

Although the FBI offers no specific guidance, the agency does caution that employers should watch for mismatched audio and visual cues. In these conversations, “the motions and mouth motion of [the interviewee] on-camera does not properly synchronize with the sound,” the PSA states. “At times, activities such as laughing, snorting, or other aural actions do not match what is seen visually.”