What are AMD’s Instinct AI accelerators, also known as AMD Instinct processors? It is one of the biggest questions in the GPU space right now. AMD’s Instinct AI accelerators have emerged as a formidable challenger to NVIDIA’s dominance in parallel computing. While NVIDIA is renowned for its GPU lineup, AMD’s Instinct accelerators have drawn attention by powering two of the world’s largest supercomputers, Frontier and El Capitan.

In addition, AMD’s open-source ROCm platform has gained growing support from the community, further intensifying the competition between the two companies. But what exactly are AMD’s Instinct AI accelerators, and how do they compare in power and performance with NVIDIA’s Tensor Core GPUs?


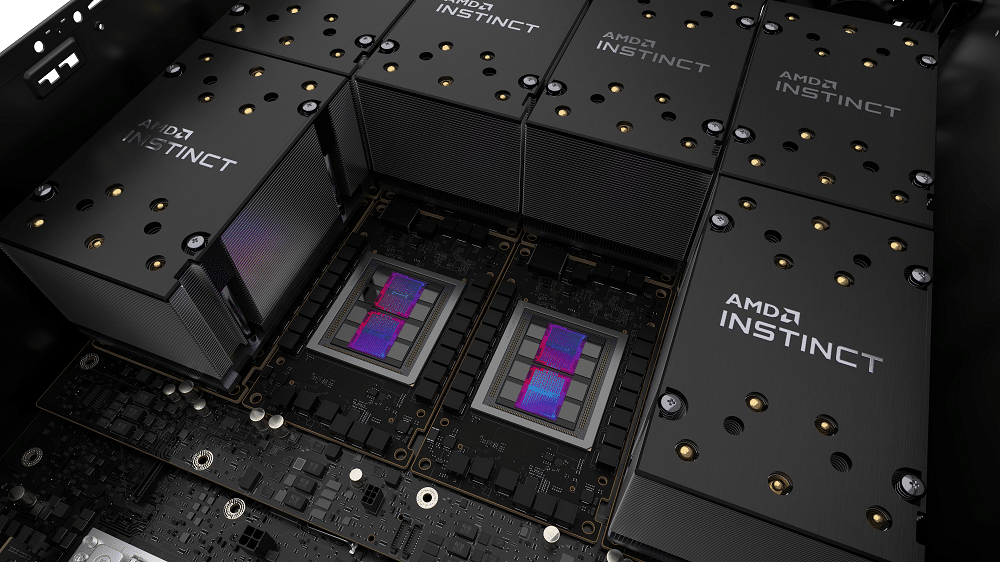

What Is an AMD Instinct Processor?

AMD’s Instinct processors are no ordinary GPUs. They are high-performance, enterprise-grade accelerators designed specifically for AI and high-performance computing (HPC). Unlike consumer-grade GPUs, Instinct GPUs are purpose-built, with specialized features and optimizations that make them well suited to AI training and other compute-intensive workloads.

One remarkable achievement of the Instinct series is powering Frontier, the world’s first supercomputer to break the exascale barrier. Frontier reached about 1.1 exaFLOPS, or roughly 1.1 × 10^18 double-precision (FP64) floating-point operations per second, on the HPL benchmark used to rank the TOP500 list. This level of computing power opens up new possibilities for tackling complex problems in many fields.

Supercomputers equipped with AMD Instinct GPUs are already being used for research in critical areas such as cancer treatment, sustainable energy, and climate change.

What Makes AMD Instinct Processors So Powerful

AMD Instinct processors have gone through significant technological upgrades to make exascale supercomputers possible and to bring the same technology to mainstream servers. These are some of the key technologies behind AMD Instinct GPUs:

CDNA Architecture: AMD’s Instinct GPUs are based on the CDNA (Compute DNA) architecture, which is designed specifically for HPC and AI workloads. Unlike AMD’s graphics-oriented RDNA architecture, CDNA drops most fixed-function graphics hardware and devotes the chip to compute units, larger caches, and higher memory bandwidth.

Infinity Fabric Technology: Instinct accelerators use Infinity Fabric, AMD’s high-speed interconnect, to link the dies inside a package (such as the two graphics compute dies of an MI250X), to connect GPUs to one another, and, in some systems, to connect GPUs directly to AMD EPYC CPUs. This raises data transfer rates, reduces latency, and keeps the processing units supplied with data.

High Bandwidth Memory (HBM): Instinct GPUs use High Bandwidth Memory, which stacks DRAM dies vertically and places the stacks beside the GPU on the same package. Each stack connects through a very wide interface, so HBM delivers far more bandwidth than conventional graphics memory and reduces memory bottlenecks in data-hungry workloads.
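To see why a wide interface matters, peak bandwidth can be estimated as bus width times per-pin data rate, divided by 8 bits per byte. The short Python sketch below applies that formula. The stack count, the 1024-bit per-stack width, and the 3.2 Gb/s pin rate are illustrative assumptions rather than figures from this article, and the GDDR6 comparison is a hypothetical configuration; check AMD’s datasheets for the exact values of a given card.

```python
# Peak memory bandwidth from interface width and per-pin data rate (a sketch).
# Assumed, illustrative figures: HBM2e stacks with a 1024-bit interface each,
# running at 3.2 Gb/s per pin. These are not taken from the article.

def peak_bandwidth_gb_per_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Theoretical peak in GB/s: pins x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8

STACK_WIDTH_BITS = 1024  # assumed interface width of one HBM2e stack
stacks = 8               # assumed number of stacks on the package

hbm_gbs = peak_bandwidth_gb_per_s(stacks * STACK_WIDTH_BITS, 3.2)
print(f"{stacks} HBM2e stacks: {hbm_gbs / 1000:.2f} TB/s")

# Hypothetical contrast: a 256-bit GDDR6 bus at a much faster 20 Gb/s per pin.
gddr_gbs = peak_bandwidth_gb_per_s(256, 20.0)
print(f"256-bit GDDR6: {gddr_gbs / 1000:.2f} TB/s")
```

Under these assumptions, eight stacks give about 3.28 TB/s, roughly five times the hypothetical GDDR6 bus, even though each HBM pin runs far slower. The width of the interface, not the speed of each pin, is what makes the difference.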

Advanced Matrix Operations: Instinct accelerators include dedicated matrix hardware, which AMD calls Matrix Cores, along with matrix fused multiply-add (MFMA) instructions. Matrix multiplication dominates deep learning and many HPC codes, so these units speed up both training and inference.
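Matrix hardware of this kind computes a fused multiply-add over small tiles, D = A × B + C, as a single operation. The plain-Python function below is only a model of that arithmetic: it loops element by element, which the hardware does not do, and the function name and tile sizes are illustrative choices, not AMD’s API.

```python
# A plain-Python model of a matrix fused multiply-add, D = A x B + C.
# Dedicated matrix units perform this on small tiles in one instruction;
# here it is spelled out with loops purely to show the arithmetic.

Matrix = list[list[float]]

def matrix_fma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for an (m x k) A, (k x n) B, and (m x n) C."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of A and B must match")
    if len(c) != m or any(len(row) != n for row in c):
        raise ValueError("C must be m x n")
    d = [row[:] for row in c]  # start from a copy of the accumulator C
    for i in range(m):
        for j in range(n):
            acc = d[i][j]
            for p in range(k):
                acc += a[i][p] * b[p][j]  # multiply, then accumulate
            d[i][j] = acc
    return d

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
c = [[1.0, 0.0], [0.0, 1.0]]
print(matrix_fma(a, b, c))
```

On this 2 × 2 example it returns [[20.0, 22.0], [43.0, 51.0]]. A neural-network layer breaks its large matrix products into many such tiles, which is why dedicating hardware to this one operation pays off.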

Optimized Software Stack: Instinct GPUs are supported by ROCm (originally short for Radeon Open Compute), AMD’s open-source software platform for HPC and AI. ROCm includes drivers, math and communication libraries, and development tools, as well as HIP, a C++ programming interface modeled on CUDA that makes it easier to port existing CUDA code to Instinct accelerators.
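One practical consequence: ROCm builds of PyTorch reuse the familiar torch.cuda interface for AMD GPUs and report the HIP version in torch.version.hip, so much existing PyTorch code runs unchanged. The sketch below is a hypothetical helper, describe_gpu_backend, that reports which backend the installed PyTorch build targets. It assumes PyTorch may or may not be installed and falls back gracefully if it is missing.

```python
# Report which GPU backend the installed PyTorch build targets (a sketch).
# describe_gpu_backend is a hypothetical helper, not part of PyTorch.
# On ROCm builds, torch.version.hip holds a version string; on CUDA and
# CPU-only builds it is None.

def describe_gpu_backend() -> str:
    try:
        import torch
    except ImportError:
        return "PyTorch not installed"
    hip = getattr(torch.version, "hip", None)
    if hip:
        backend = f"ROCm/HIP {hip}"
    elif torch.version.cuda:
        backend = f"CUDA {torch.version.cuda}"
    else:
        backend = "CPU-only build"
    # On ROCm builds, AMD GPUs are exposed through the torch.cuda namespace.
    if torch.cuda.is_available():
        backend += f", device 0: {torch.cuda.get_device_name(0)}"
    return backend

print(describe_gpu_backend())
```

On a ROCm system with an Instinct GPU, the output names a ROCm/HIP version and the device; on a machine without PyTorch, it simply reports that PyTorch is missing.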

Instinct AI Accelerators Vs. Radeon GPU AI Accelerators?

AMD has you covered with its Instinct and Radeon GPU lineups, each tailored to different needs. For enterprise applications, AMD’s Instinct GPUs take the stage with the powerful CDNA architecture, HBM memory, and the Infinity Fabric interconnect. The Radeon GPUs, on the other hand, are built on the RDNA architecture with GDDR6 memory and Infinity Cache, and cater to everyday consumers.

While the Radeon series cannot match the Instinct GPUs in raw power, it still packs a punch. Radeon GPUs feature one or two AI accelerators per compute unit, which lets them handle demanding AI workloads. Take the RDNA 3-based Radeon RX 7900 XT: with two AI accelerators per compute unit, it delivers a peak of 103 TFLOPS in half precision (FP16) and 52 TFLOPS in single precision (FP32).
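Peak figures like these come from simple arithmetic: compute units × lanes per unit × FLOPs per lane per clock × clock speed. The Python sketch below reproduces the RX 7900 XT numbers under assumptions that are not stated in this article: 84 compute units, 64 lanes per unit, a boost clock of about 2.4 GHz, and 4 FP32 FLOPs per lane per clock (a fused multiply-add counts as two, doubled again by RDNA 3’s dual-issue). FP16 is simply modeled as twice FP32, matching the ratio of the two quoted figures.

```python
# Back-of-the-envelope peak throughput for an RDNA 3 GPU (a sketch).
# Assumed RX 7900 XT figures, not from the article: 84 compute units,
# 64 lanes per CU, ~2.4 GHz boost clock.

def peak_tflops(compute_units: int, lanes_per_cu: int, clock_ghz: float,
                flops_per_lane_per_clock: int) -> float:
    """Peak TFLOPS = CUs x lanes x FLOPs per lane per clock x clock (GHz) / 1000."""
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz / 1000

# Assumption: FMA = 2 FLOPs, times 2 for dual-issue = 4 FP32 FLOPs per lane per clock.
fp32 = peak_tflops(84, 64, 2.4, 4)
# Assumption: FP16 peak modeled as twice the FP32 rate.
fp16 = 2 * fp32
print(f"FP32 ~{fp32:.1f} TFLOPS, FP16 ~{fp16:.1f} TFLOPS")
```

This gives about 51.6 TFLOPS in FP32 and 103.2 TFLOPS in FP16, in line with the figures above. These are theoretical peaks; real workloads rarely sustain them.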

While Instinct GPUs shine at tasks like training large language models (LLMs) and high-performance computing (HPC), Radeon GPUs excel elsewhere. They are a great fit for fine-tuning pre-trained models, running inference, and graphics-intensive applications.